AI-ready data center construction | hyperscale & modern infrastructure

Build the future with AI data center infrastructure. Explore hyperscale projects, AI-ready data centers, and modern data center construction solutions.

Every time an AI model answers a question or generates an image, there’s a massive computational system working behind the scenes. That system lives inside an AI data center — a new breed of facility purpose-built to train, deploy, and deliver artificial intelligence workloads.

These aren’t the same data centers that once hosted websites or stored business files. They’re high-performance compute (HPC) environments, engineered for extraordinary power density, advanced cooling, and ultra-fast networking.

The rapid global expansion of AI data center infrastructure is reshaping the entire construction industry. What used to be a niche form of IT real estate is now a trillion-dollar race to build the digital foundation for intelligent systems.

This article covers data center construction, AI data center infrastructure, several modern hyperscale data center projects, and more.

What is an AI data center?

An AI data center is a specialized facility designed to support the training, deployment, and operation of artificial intelligence models.

Unlike conventional cloud data centers — which focus on general computing, storage, and networking — AI-ready data centers are optimized for massive parallel processing.

They rely heavily on GPU clusters, tensor processing units (TPUs), and custom AI accelerators that handle the immense matrix computations required for deep learning.

The technical core of AI data centers

AI data centers are often described as “factories of intelligence.” Inside these massive facilities, data flows through networks of GPUs and storage arrays at incredible speeds, enabling machine learning models to train, adapt, and respond in real time.

At the engineering level, an AI data center is a balance between power delivery, thermal control, and high-speed connectivity — all designed to keep compute clusters running at full tilt without overheating or slowing down.

Let’s break down the key components that define AI data center infrastructure today.

1. Compute Power

The primary difference between traditional and AI data centers is compute density. Instead of general-purpose CPUs, AI centers rely on:

GPUs: Designed for parallel computation, crucial for AI training and inference.

TPUs: Custom chips (like Google’s TPUv5) specialized for large-scale neural network operations.

ASICs: Purpose-built processors optimized for edge AI and inference workloads.

Each GPU server rack can draw 80–120 kilowatts (kW), compared to 10–15 kW in a typical enterprise rack. This creates a new set of design imperatives for AI data center infrastructure construction.

To manage such density, builders must design reinforced flooring, power trunking systems, and custom rack-level cooling manifolds.
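To make the density gap concrete, here is a minimal sketch that counts how many racks the same IT load would need at each density. The 12 MW load and the function name `racks_needed` are illustrative assumptions. Only the per-rack figures (15 kW and 120 kW) come from the ranges quoted above.

```python
import math


def racks_needed(it_load_kw: float, rack_kw: float) -> int:
    """Return the number of racks needed to host an IT load at a given per-rack draw."""
    if it_load_kw < 0 or rack_kw <= 0:
        raise ValueError("load must be non-negative and rack power must be positive")
    return math.ceil(it_load_kw / rack_kw)


# Hypothetical IT load, chosen only for illustration.
IT_LOAD_KW = 12_000

enterprise_racks = racks_needed(IT_LOAD_KW, 15)   # upper end of the enterprise range
ai_racks = racks_needed(IT_LOAD_KW, 120)          # upper end of the AI range

print(f"Enterprise racks at 15 kW: {enterprise_racks}")
print(f"AI racks at 120 kW: {ai_racks}")
```

Under these assumptions the same load fits in one eighth of the racks, which is why floor loading, power trunking, and cooling have to be concentrated into a much smaller footprint.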

2. Power Supply and Distribution

AI models don’t sleep — they train continuously for days or weeks. That means any power interruption can derail millions of dollars in computation.

Modern AI infrastructure construction now includes:

Dedicated substations on-site (up to 400 kV connections).

Uninterruptible power supplies (UPS) with lithium-ion battery arrays.

On-site backup generation using diesel, natural gas, or hydrogen cells.

Power usage effectiveness (PUE) metrics below 1.2 in advanced designs.
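PUE is the ratio of total facility power to the power delivered to IT equipment, so a value of 1.2 means 0.2 W of overhead (cooling, power conversion, lighting) for every watt of compute. The sketch below shows the arithmetic. The function names and the sample loads are illustrative assumptions, not figures from any specific facility.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be below IT power")
    return total_facility_kw / it_equipment_kw


def overhead_kw(it_equipment_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, conversion losses, etc.) implied by a PUE value."""
    return it_equipment_kw * (pue_value - 1.0)


# Hypothetical example: 100 MW of IT load in a facility drawing 120 MW in total.
print(f"PUE: {pue(120_000, 100_000):.2f}")
print(f"Overhead at PUE 1.2: {overhead_kw(100_000, 1.2):,.0f} kW")
```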

Many hyperscale operators integrate renewable energy microgrids, allowing AI-ready data centers to offset carbon emissions while ensuring reliability.

For example, Microsoft’s new AI data center in Iowa runs primarily on wind energy, supplying 100% renewable power to thousands of GPUs operating 24/7.

3. Cooling

If power is the heart, cooling is the circulatory system. GPU clusters can generate heat densities exceeding 50 kW per rack — levels that would destroy conventional air-cooled environments.

That’s why AI infrastructure construction is now dominated by liquid and immersion cooling technologies:

Direct-to-chip cooling: Coolant runs directly through cold plates mounted to GPU or CPU chips.

Immersion cooling: Servers are submerged in dielectric fluid, enabling efficient heat transfer.

Rear-door heat exchangers: Liquid-cooled doors extract heat from exhaust air at the rack level.

According to the Uptime Institute, liquid cooling adoption has tripled since 2020, driven primarily by AI training workloads.

Cooling efficiency is also a sustainability issue. Every percentage point of efficiency can save millions of kilowatt-hours per year, lowering both cost and carbon footprint.
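The "millions of kilowatt-hours" figure can be checked with simple arithmetic. The sketch below assumes a facility that draws a constant 100 MW all year, which is the low end of the large-facility range mentioned later in this article. Real loads vary, so treat the result as an order-of-magnitude estimate. The function names are illustrative.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def annual_energy_kwh(avg_load_mw: float) -> float:
    """Annual energy use in kWh for a facility running at a constant average load."""
    return avg_load_mw * 1000 * HOURS_PER_YEAR


def savings_kwh(avg_load_mw: float, percent_saved: float) -> float:
    """Energy saved per year when total consumption drops by the given percentage."""
    return annual_energy_kwh(avg_load_mw) * percent_saved / 100


# Assumed constant 100 MW draw; a 1% efficiency gain.
print(f"Annual energy: {annual_energy_kwh(100):,.0f} kWh")
print(f"Saved by a 1% gain: {savings_kwh(100, 1):,.0f} kWh")
```

Under that assumption, a one percent gain is worth roughly 8.76 million kWh per year.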

4. Networking

AI workloads require massive data throughput between compute nodes. Training a model like GPT or Gemini involves thousands of GPUs exchanging parameters hundreds of times per second.

Traditional Ethernet networks cannot handle this load, so AI-ready data centers deploy:

InfiniBand networking, offering up to 800 Gbps interconnect speeds.

Optical fiber backbones for long-range, high-speed transmission.

Custom switching fabrics designed to reduce latency to microseconds.

This enables the “distributed brain” effect — allowing GPUs across entire data halls or even across continents to function as one synchronized system.
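To see why link speed matters, consider a rough lower bound on the time one synchronization step takes. The sketch assumes a ring all-reduce, a common way to combine gradients across GPUs; the article does not say which collective any given operator uses. In a ring all-reduce each GPU sends about 2(N-1)/N times the payload size. The 10 GB payload and 1,024 GPUs are hypothetical, and the estimate ignores latency, congestion, and overlap with computation.

```python
def ring_allreduce_bytes_per_gpu(payload_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in a ring all-reduce of the given payload."""
    if num_gpus < 1:
        raise ValueError("need at least one GPU")
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


def min_transfer_seconds(payload_bytes: float, num_gpus: int, link_gbps: float) -> float:
    """Bandwidth-only lower bound on all-reduce time over a link of link_gbps gigabits/s."""
    if link_gbps <= 0:
        raise ValueError("link speed must be positive")
    link_bytes_per_s = link_gbps * 1e9 / 8  # convert gigabits/s to bytes/s
    return ring_allreduce_bytes_per_gpu(payload_bytes, num_gpus) / link_bytes_per_s


# Hypothetical: 10 GB of gradients, 1,024 GPUs, compared at two link speeds.
for gbps in (100, 800):
    seconds = min_transfer_seconds(10e9, 1024, gbps)
    print(f"{gbps} Gbps link: at least {seconds:.2f} s per synchronization")
```

Because the bound scales inversely with bandwidth, an eightfold faster link cuts the bandwidth-bound part of each step by the same factor.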

5. Storage and Data Flow

AI training depends on data — vast amounts of it. An average generative model may process petabytes of information during training.

AI data center infrastructure construction now integrates multi-tier storage systems to handle different stages of the AI lifecycle:

High-speed NVMe flash arrays for training datasets.

Object storage clusters for long-term model retention.

Data caching layers to accelerate repetitive reads/writes.
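A quick calculation shows why the fastest tier is reserved for training datasets. The sketch estimates how long one full pass over a dataset takes at a given aggregate read throughput. The 1 PB dataset size echoes the "petabytes" scale mentioned above. The two throughput figures are hypothetical placeholders, not measurements of any particular storage product.

```python
def full_pass_hours(dataset_tb: float, aggregate_gb_per_s: float) -> float:
    """Hours needed to read a dataset once at a sustained aggregate throughput."""
    if aggregate_gb_per_s <= 0:
        raise ValueError("throughput must be positive")
    dataset_gb = dataset_tb * 1000  # decimal units: 1 TB = 1000 GB
    return dataset_gb / aggregate_gb_per_s / 3600


# Hypothetical tiers: a slower capacity tier and a faster flash tier.
for label, gb_per_s in (("capacity tier", 10), ("flash tier", 100)):
    hours = full_pass_hours(1000, gb_per_s)  # 1000 TB = 1 PB
    print(f"{label} at {gb_per_s} GB/s: {hours:.1f} h per pass")
```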

As data flows continuously through these systems, energy-efficient design becomes critical. Every watt saved in storage optimization is a watt freed for compute.

Put simply, AI-ready data centers are not just about storing data — they are about processing intelligence at scale.

Why AI is reshaping data center construction

The construction industry is undergoing one of its biggest transformations in decades because of AI. The new generation of data center construction projects is more complex, energy-intensive, and capital-heavy than anything before.

In traditional data centers, thousands of CPU-based servers handled user transactions or cloud storage. But AI workloads — especially LLMs — demand interconnected GPU clusters capable of running hundreds of trillions of operations per second.

This new architecture changes everything about how a facility is built:

Thicker power lines and redundant substations for consistent supply.

High-performance cooling loops integrated into the floor and ceiling.

Reinforced structures to handle heavy GPU racks and liquid systems.

Where a legacy data center might operate comfortably at 5 megawatts (MW), large AI facilities can reach 100–300 MW of capacity, a scale comparable to entire industrial plants.

How AI is transforming the construction process

The design and build of AI data center infrastructure itself is becoming AI-assisted. Machine learning tools now analyze design simulations, predict airflow, and optimize equipment placement to reduce heat pockets and energy waste.

Generative design software evaluates thousands of layout permutations for maximum space utilization.

Digital twins — virtual replicas of physical sites — enable predictive maintenance and operational modeling.

Robotic construction equipment increases precision in complex builds, especially for modular and prefabricated designs.

These tools accelerate delivery and reduce human error, marking a new chapter in AI infrastructure construction where artificial intelligence literally builds its own home.

The surge of hyperscale data center projects

The demand for AI compute has sparked an unprecedented wave of hyperscale data center projects. These massive facilities — often spanning over one million square feet — are being built by companies like Google, Microsoft, Amazon, Meta, and NVIDIA to handle the next generation of AI workloads.

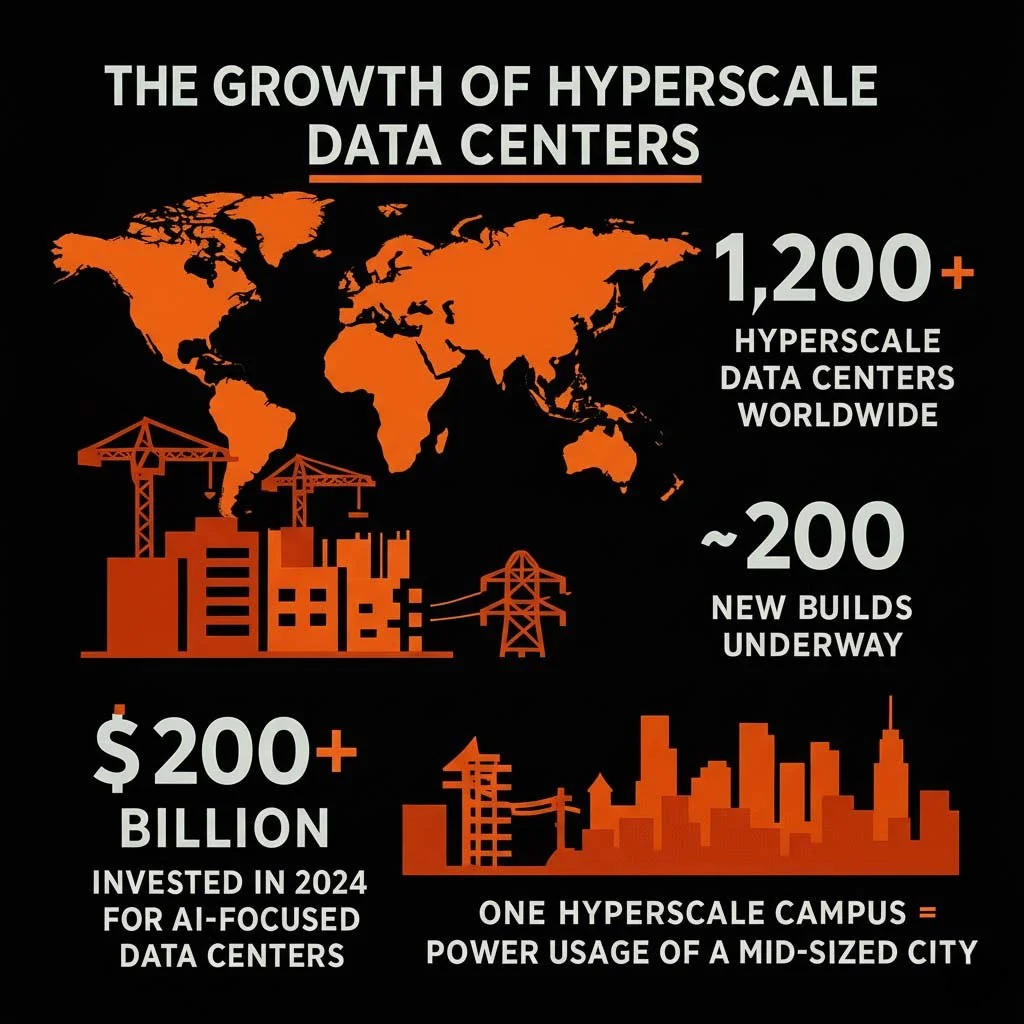

According to Synergy Research, there are now over 1,200 hyperscale data centers worldwide, with nearly 200 new builds underway.

In 2024 alone, cloud providers announced more than $200 billion in new data center infrastructure investments focused on AI and advanced compute.

The average hyperscale campus consumes as much energy as a mid-sized city, pushing developers to innovate in renewable energy sourcing.

The hyperscale formula

What sets hyperscale data center projects apart is their focus on scale, speed, and sustainability:

Scale: Designed to host hundreds of thousands of GPUs and AI servers.

Speed: Built using modular construction and prefabricated units to deploy capacity in months instead of years.

Sustainability: Integrated with renewable power (solar, wind, or hydro) and carbon-free cooling technologies.

Each new hyperscale project is a redefinition of what a data center can be — a living, evolving machine that grows with global AI demand.

Top hyperscale data center projects

Modern data center construction combines massive GPU clusters, renewable energy systems, and innovative cooling technologies to deliver unparalleled performance and sustainability.

Here are four of the most significant hyperscale AI data center projects leading this global transformation — built by Microsoft, Google, Meta, and NVIDIA:

1. Microsoft “Athena” Project — Iowa, USA

The Microsoft “Athena” Project in Iowa is one of the most advanced hyperscale data center projects in the United States. With an estimated capacity of 120 MW, it hosts powerful GPU clusters that drive the Azure OpenAI Service and large-scale model training.

The facility is built with advanced liquid cooling, powered entirely by renewable wind energy, and designed for modular expansion — allowing seamless scaling as AI demands grow.

2. Google TPUv5 Data Centers — Oregon & Finland

Google’s data centers in Oregon and Finland run TPUv5, the custom chips described earlier, which are specialized for large-scale neural network operations.

3. Meta’s Altoona Campus — Iowa, USA

Meta’s Altoona data center campus spans more than 5 million square feet, developed in multiple phases to accommodate ever-growing computational needs. It focuses on AI-driven operations that optimize workloads in real time for maximum efficiency.

Advanced AI models manage internal systems to reduce energy waste and minimize cooling inefficiencies, making Altoona a leading example of intelligent AI data center infrastructure management.

4. NVIDIA Earth-2 Supercomputer Data Center — Sweden

The NVIDIA Earth-2 facility in Sweden is built to simulate global climate models using the power of AI. Fueled by 100% renewable energy, it employs waterless cooling systems and features DGX SuperPOD clusters delivering more than 275 petaflops of compute performance.

Together, these hyperscale data center projects highlight the global transformation toward AI data center infrastructure — combining enormous computational power, cutting-edge engineering, and responsible energy use to build the backbone of the intelligent digital era.

Sustainability strategies for hyperscale data center projects

AI data centers are projected to consume up to 10% of global electricity by 2030, according to the International Energy Agency (IEA).

That’s forcing the industry to evolve faster than ever before.

Renewable Energy Contracts

Hyperscale operators now sign 20-year power purchase agreements (PPAs) with solar and wind providers to lock in clean supply.

Heat Recovery Systems

In Finland and Denmark, AI data centers redirect excess heat to warm thousands of homes.

On-Site Generation

Facilities are integrating microgrids, battery energy storage systems (BESS), and even hydrogen fuel cells for redundancy.

AI-Driven Energy Optimization

Machine learning models control airflow, cooling, and voltage dynamically, reducing waste by 10–20%.

In short, modern data center construction now intertwines architecture, sustainability, and intelligence. Each watt saved extends the capability of AI itself.

Economic impact of AI data centers

AI data centers are not just physical assets — they’re economic multipliers.

Each hyperscale facility can:

Create thousands of construction jobs.

Inject hundreds of millions into local economies.

Catalyze nearby industries (chip manufacturing, renewable energy, fiber networks).

For example:

Microsoft’s recent $10 billion AI data center expansion in the U.S. is projected to generate 50,000 indirect jobs.

Google’s European AI data center infrastructure investments have boosted GDP by over €3 billion since 2020.

As AI models expand, so does the demand for physical infrastructure. Every AI breakthrough — from medical imaging to autonomous driving — begins with construction crews laying concrete and fiber for compute power.

The role of modern data center construction firms

Building these next-generation facilities requires a new level of interdisciplinary expertise. The firms leading modern AI infrastructure construction combine traditional engineering with deep digital knowledge.

Modern data center construction blueprints now include:

Thermal modeling and fluid dynamics simulations for cooling optimization.

AI-powered monitoring systems that guide equipment placement and airflow.

Sustainable materials designed to minimize carbon footprints.

Companies like Turner Construction, DPR Construction, and Skanska have developed dedicated data center divisions that specialize in hyperscale and AI-driven builds.

The modular and automated future of construction

The scale and speed of AI infrastructure construction have forced developers to rethink the way these buildings are created.

Modular Construction

Instead of traditional site-built methods, many companies now use prefabricated modules — fully equipped units built off-site and assembled like building blocks.

Benefits include:

Faster deployment: Time to market reduced by up to 50%.

Scalability: Easy to expand in phases.

Consistency: Factory-built modules ensure uniform quality and efficiency.

This approach is ideal for hyperscale data center projects, where operators need to roll out capacity across continents in synchronized phases.

AI in Construction

AI itself is also transforming how facilities are built.

Autonomous drones scan construction sites for quality assurance.

Robotic arms install conduit and cabling with millimeter accuracy.

Predictive analytics monitor supply chain risks and project delays.

The result is a smarter, faster, safer construction process — where AI builds the infrastructure that enables more AI.

Next decade of data center construction

The 2020s will go down as the decade when modern data center construction became the backbone of artificial intelligence.

By 2030:

Over 2,000 hyperscale data center projects will be operational worldwide.

The majority of new builds will be AI-optimized from the ground up.

Sustainability regulations will require carbon-neutral operation by design.

The convergence of AI and construction is a fundamental reshaping of how we build the digital world.

Conclusion

Behind every AI breakthrough stands an army of engineers, builders, and designers working on AI data center infrastructure.

The global boom in modern data center construction and hyperscale data center projects is about redefining the limits of what humanity can build.

From AI-ready data centers in the U.S. to sustainable hyperscale campuses in Europe and Asia, we’re witnessing a new industrial revolution — one powered by intelligence, built with precision, and sustained by innovation.

In the years ahead, AI infrastructure construction will become the defining discipline of modern civilization. It’s about building the future of intelligence itself.